Seattle U Changes Academic Integrity Policy to Address Artificial Intelligence

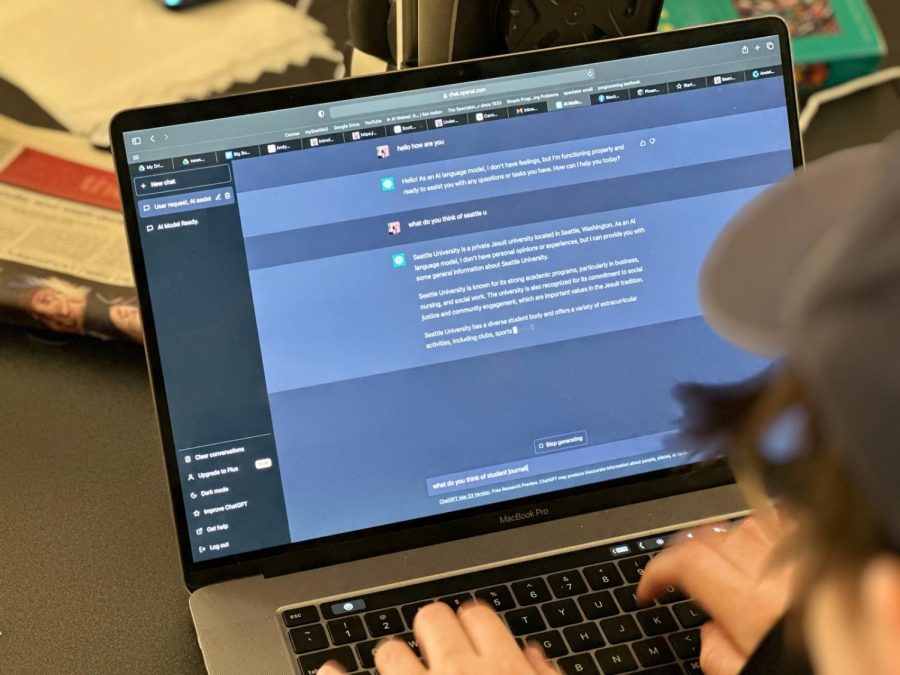

A student uses ChatGPT.

As Redhawks returned from spring break and prepared for the upcoming quarter, an email from Seattle University’s Office of the Registrar awaited them in their inboxes. The email, sent March 27, announced an update to the university’s academic integrity policy in light of a recent trend of students using ChatGPT, an AI chatbot, to complete assignments. The document informed students about potential policy and syllabus changes to be implemented by some faculty members and redefined what constitutes plagiarism and cheating.

But some faculty feel that the statement misses the root of the issue. Beatrice Lawrence, an associate professor of theology and religious studies, believes the updated policy will not prevent cheating and does not respond to the concerns professors have about AI.

“The statement that came out has no teeth [because] there is no way to enforce it,” Lawrence said. “I hope the school will find a way to support faculty in addressing the potential for cheating with ChatGPT through some practical means.”

Created by OpenAI, ChatGPT launched in November 2022 on the company’s GPT-3.5 model; the newer GPT-4 model was released March 14. Using readily available information from the internet, the chatbot completes various tasks according to the user’s requests, including solving math equations, writing essays and coding. The AI has its shortcomings, and users report false information and human biases inserted into creative works. Additionally, several U.S. school districts have raised concerns about students using ChatGPT to generate assignments and submitting them as their own work. Seattle Public Schools banned the technology last December, citing a need to discourage its students from cheating and to stimulate creative thought.

In the wake of the update, Seattle U is divided on the ethics and integrity of using ChatGPT and other AI programs. While passing off AI-generated work as one’s own is considered cheating, using the technology to brainstorm ideas and edit assignments is not covered by the policy. Pierce Harriz, a third-year computer science major, uses the program to help create talking points and outlines for assignments, which saves them time. Harriz understands why professors are wary of the program, as students could submit plagiarized work as their own.

“For brainstorming and a lot of those beginning steps, it is 100 percent useful, but it crosses the line when it comes to plagiarism because that is what a language model [does],” Harriz said. “It takes other people’s works and combines it all into one—when you ask it a question, it pieces [those works] together.”

According to Rick Fehrenbacher, the vice provost for academic technology and innovation, the policy on ChatGPT and its application remain an ongoing project as administrators work to satisfy community expectations. In the meantime, the university is planning workshops and providing resources to educate professors about the new academic world emerging from AI.

“The fact of the matter is people are actually going to use [ChatGPT] for these tasks,” Fehrenbacher said. “What we have to do is think about what we don’t want ChatGPT to be doing and what we want our students to use ChatGPT for so that they can do more.”

Across campus, professors are experimenting and adjusting their curricula to prevent students from using ChatGPT in class. Lawrence limits electronics usage and assigns oral or handwritten work to her students. Students who have become used to online assignments or who have accessibility concerns, however, may find the adjustment more difficult.

“I do think it will be difficult for some students, and my job is to help them,” Lawrence said. “I knew there were [going to be] accessibility issues and I am currently working with the disability services office to come up with a way for students to complete [my] work in a way that isn’t a hardship.”

Students in the College of Science and Engineering have also witnessed a change within their curriculum. Harriz notes one professor has adopted new policies requiring students to be able to explain their work.

“The professor [would] randomly ask you to come by his office, [where] he takes your homework assignment and asks, ‘explain how you wrote this.’ If you are not able to explain why you wrote it or [why it is] different from the last submission, you might be caught and called out on using AI,” Harriz said.

The academic world is currently undergoing a metamorphosis as AI technology evolves at a rapid pace, leaving students, professors and administrators scrambling to keep up. Only time will tell how AI programs will be used or excluded in the classroom environment.