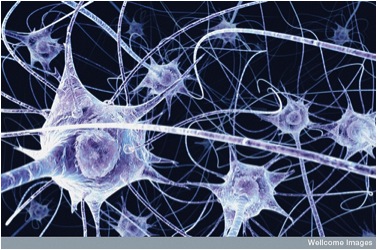

I’m going to really nerd out this week. I went to a lecture on the mathematical modelling of mouse neuronal networks this past Thursday, and my mind is officially blown. Scientists at the Allen Institute for Brain Science, in conjunction with a lot of other institutes in Europe and Asia, are working on describing and predicting neuronal firing patterns in brains. One neuron is complicated enough; now add all the connections the neuron makes with other cells, how many neurons are in a whole system devoted to a task (like language production or sight), and then extrapolate that out to the whole brain. It’s enormously complex and gorgeous. Think about it like this: I’m here at my laptop, hitting specific keys on a keyboard, making little marks pop up on a screen that can be understood by someone else. You’re reading (and hopefully understanding) what I’m typing by integrating the sensory input of seeing the text with what you know about grammar and vocabulary, along with all of your own rich history of memories and knowledge. How do you do it?! You’re incredible! Your brain is a fantastic piece of equipment, which is why we are so passionate about finding out how it works.

The basic model for cognition is this: sensory input (marks on a screen) > processing (your brain integrating the meaning and context of the marks) > output (reading something and understanding it). Each step is mediated by action potentials, the electrical spikes or signals in an individual neuron, in specific systems of neurons devoted to tasks. We are making progress in understanding and modeling each level that goes into cognition: an action potential, a system or cluster of neurons, and finally the whole picture of millions of neurons working together to produce consciousness.

Dr. Nicholas Cain from the Allen Institute for Brain Science presented a fascinating look at how he and a team of mathematicians, biologists, and engineers are modeling how neurons fire in a mouse brain. Their project combines many different levels of modeling to give a larger, more precise description of firing patterns.

First, they look at how to describe the smallest piece: individual neurons. What conditions can cause a neuron to fire? How often does this happen in specific conditions? What about input from other neurons? Our current understanding of neurons is that the receiving cell (cell B) takes in information from tons of connections with other cells; some cells may send tons of signal, while others may send only a little. Cell B won’t fire an action potential unless enough input from other neurons tells it to. Dr. Cain and his team use a relatively well-known model to predict individual neuron activity: the leaky integrate-and-fire (LIF) model (1). The model says that once enough input is received, the threshold for initiating an action potential or “spike” is reached and the cell fires. Afterwards, the voltage is reset to the original resting state, and there is a refractory period during which the cell cannot fire again. That refractory period must be accounted for when extending the model out to systems of neurons.

The second level to model is a population of neurons. A population may be responsible for a specific function, like vision or hearing. Population models take into account tons of input from tons of interconnected neurons, using equations that build on single-neuron models like LIF to describe the whole population at once. In this project, the team used DIPDE (displacement integro-partial differential equation) (2) to describe network firing. Fascinatingly, the model could predict steady states (equilibrium) of neurons amazingly accurately; much more accurately than some of the other most popular methods for modeling neuron populations.

But of course, there’s much more to consider. The third level is to model the whole shebang. How do I process catching a ball, or how do you read? What connections are occurring to make that possible? The techniques used for this step are numerous and often integrate a lot more biology with the math. Some models look at everything: the biology of the neuron (one of my favorite parts), up to the population, up to the large-scale activity in a brain. This is referred to as the study of whole neural networks, or connectomes. Mathematical modeling at this level is hard to do; when we include every small variable, it takes a lot of time to simulate a few milliseconds. Multiply that by hundreds of trials, and we are looking at years of research to test a single simulation.
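To make the leaky integrate-and-fire idea described earlier a little more concrete, here is a minimal Python sketch of a single LIF neuron. This is a toy illustration, not the Allen Institute team's code. It assumes the standard textbook form of the model, dV/dt = (-(V - V_rest) + R*I) / tau, stepped forward with simple Euler integration. The function name `simulate_lif` and every parameter value (threshold, time constant, refractory period, and so on) are assumptions picked for illustration, not numbers from the talk.

```python
def simulate_lif(input_current, dt=0.1, tau_m=10.0, v_rest=-65.0,
                 v_reset=-65.0, v_threshold=-50.0, resistance=10.0,
                 refractory=2.0):
    """Euler-integrate one leaky integrate-and-fire neuron.

    input_current: one input current value (nA) per time step.
    Times are in ms, voltages in mV, resistance in megaohms.
    All default values are illustrative assumptions.
    Returns (voltages, spike_times).
    """
    v = v_rest
    refractory_left = 0.0
    voltages = []
    spike_times = []
    for step, current in enumerate(input_current):
        t = step * dt
        if refractory_left > 0.0:
            # The cell just fired: hold it at reset and ignore input.
            refractory_left -= dt
            v = v_reset
        else:
            # The leak pulls v back toward rest; input pushes it up.
            dv = (-(v - v_rest) + resistance * current) / tau_m
            v += dv * dt
            if v >= v_threshold:
                # Threshold reached: record a spike, reset, go refractory.
                spike_times.append(t)
                v = v_reset
                refractory_left = refractory
        voltages.append(v)
    return voltages, spike_times


steps = 1000  # 100 ms of simulated time at dt = 0.1 ms

# Weak input settles at -55 mV, below the -50 mV threshold: no spikes.
weak_voltages, weak_spikes = simulate_lif([1.0] * steps)

# Stronger input would settle at -45 mV, so the cell fires repeatedly.
strong_voltages, strong_spikes = simulate_lif([2.0] * steps)

print("weak input spikes:", len(weak_spikes))
print("strong input spikes:", len(strong_spikes))
```

The weak input never produces a spike because the leak balances the input before the threshold is reached, which is the "not enough input, no action potential" behavior described for cell B. With the stronger input, the cell fires over and over, and the spacing between spikes can never be shorter than the refractory period.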
Because simulating every detail takes so long, we sometimes reduce and simplify activity to give us a general idea of what’s going on so we can apply it to other concepts more readily.

Once all the code writing and simulations are done, how do we test how well our models predict neuronal activity? There are many methods for looking at energy allocation in the brain. The Allen Institute team uses a technique that involves injecting a viral vector to monitor and tag active connections in the brain (4). Sadly, it can’t be used to detect activity in living brains, but it gives a surprisingly accurate and repeatable look at how neurons are talking with each other. They can compare the activity produced by the simulation with the activity seen in a real-life model to gauge how good their math is, and the models often have good predictive power at the global scale.

Our models are not exact, and there is still a lot of work to do on making them more precise and detailed, but we’re leaps and bounds ahead of where we were a decade ago. New simulation techniques, software, computing power, and developments in biotechnology have enabled us to look at the brain in a structured, elegant, and thoroughly magical(ly scientific) way. Science is moving in an integrated and multidisciplinary way, and the rewards are huge; I can’t wait to see what the field does next.

References:

1. http://www.cns.nyu.edu/~eorhan/notes/lif-neuron.pdf
2. http://www.ploscompbiol.org/article/info%3Adoi%2F10.1371%2Fjournal.pcbi.1003248
3. http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3107581/

Thanks to Dr. Cain and the mathematics department here at SU for putting on the presentation!

Extra Links:

- The Allen Brain Atlas: an extensive genomic and neural connection database for mouse and human brains. http://www.brain-map.org/
- BRIAN simulator: this simulator runs neural network simulations! http://briansimulator.org/