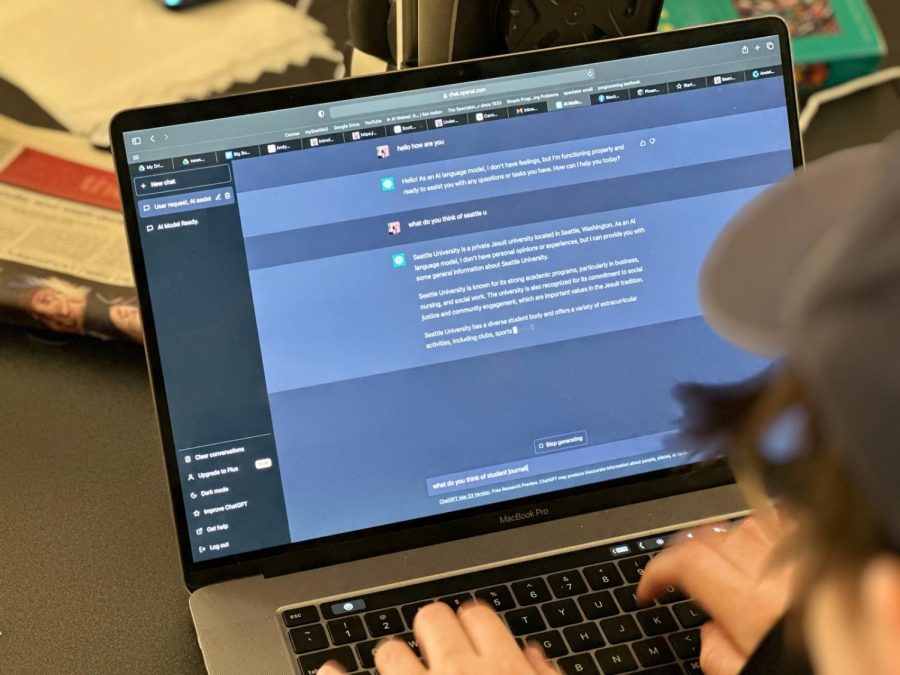

With recent advancements in artificial intelligence (AI) technology, and pop culture teeming with stories of robotic uprisings and man versus machine (“I’m sorry, Dave. I’m afraid I can’t do that.”), it may seem as though our technology has been making breakthroughs at an alarming pace. From the birth of ChatGPT to Neuralink’s first successful brain implant in a human patient, this rapid progress has fueled anxieties about AI and even doomsday predictions.

However, while the pace of high-profile releases in the past few years has seemed nothing short of exponential, the current AI boom cannot be separated from the history of technology that led up to it. Associate Teaching Professor of Philosophy Eric Severson places AI on a continuum of technology that includes not only the development of computing but also the relationship between humans and tools as a whole. Engaging with that history is crucial to understanding AI’s role in our world today.

While unease about AI’s capabilities can be a valid concern, technological advancements have always been met with some degree of polarization, often rooted in a lack of understanding.

Max Tran, a second-year computer science major and president of the Artificial and Intelligent Machine Learning Club (AnIMaL), emphasized that “AI” is currently being used as a blanket term, which makes it difficult to distinguish between different kinds of technology.

“I think the machine learning side is being hidden by the marketing side of AI and generative AI. I think that is where part of the confusion and ambiguity comes from because we’re covering up the actual terms and it’s making it harder to figure out what this is,” Tran said.

Tran went on to explain that machine learning is related to AI, but that not everything marketed as AI is AI by definition; much of it instead falls into subcategories of machine learning.

What’s the main difference? According to Tran, machine learning lacks intelligence; it can only detect patterns in data using math-based algorithms. Tran believes that equating machine learning with AI can be highly misleading, especially because the criteria for both are constantly evolving.

Making sure that public perceptions of generative AI are definitionally accurate, so that users have the tools to properly understand new advancements, is critical. But the conversation must also address its practical functions and the way it will gradually become more integrated into daily life.

Bryan Kim, a second-year computer science major and event coordinator for AnIMaL, thinks that the sudden availability of devices like the Apple Vision Pro and the AI Pin may feel dystopian to most people, but that these devices have the potential to improve accessibility for those who need more assistance in everyday life.

Pointing to our relationship with smartphones, Kim believes that over time we will become more reliant on AI-powered technology. Even so, finding a balance between skepticism and receptivity is essential.

“Awareness is huge. Just knowing how it works changes a lot of how you view AI. Have an open mind but also have discernment,” Kim said. “Society is going to change and we should expect that, but thinking about implications can help generate conversation.”

Although some fear of AI may stem from irrational notions, generative AI has in some instances done genuine harm, often by perpetuating stereotypes and prejudices in response to seemingly neutral prompts.

When The Washington Post prompted an AI image generator for a depiction of “a productive person,” it produced images of white men dressed in suits. Yet when asked to generate an image of “a person at social services,” it mainly depicted people of color. Similarly biased images were produced when it was asked to depict routine activities and common personality traits.

Severson raised concerns about how tools, including but not limited to AI, exist within the context of their society. When that society maintains socioeconomic inequities or other forms of oppression, those same problems can be internalized and reproduced by the tools themselves.

“When we develop new tools in a sexist society, we should expect that they subtly and invisibly exacerbate the privileges experienced by men. In a white supremacist society, tools that we develop—with or without anyone’s intentional effort—will often subtly or directly exacerbate the oppression of people of color,” Severson said. “What we need to be aware of every time we make or take up a tool is that we do it in a society that is already bent away from justice. Tools are not neutral.”

He compared the phenomenon to the history of medicine, in which tools were developed around and for a particular demographic of young, college-educated men, a legacy that still perpetuates medical discrimination today.

“Racialized outcomes in health care, education and criminal justice are really only explainable by systemic preferences that are carried without anyone’s direct intention. Racism and sexism do not require intentionality to flourish. They flourish nonetheless,” Severson said.

Similarly, if AI continues to be developed without accounting for how it responds to and affects existing social issues, it will keep perpetuating those unexamined problems. Severson emphasized that AI does not simply deliver the information users request; it also shapes, epistemically, the way they learn about and interact with the world.

Whether in classrooms and club meetings on the Seattle University campus or in the broader relationship between the self and society, questions about the ethics of AI remain at the forefront of the current conversation.